In linear algebra, we are occasionally interested in resolving a vector into its “projections.” Unlike components, which are determined with respect to the x- and y-axes, projections are determined with respect to an adjacent vector, one that shares the same initial point.

This post derives the formulas that help us find these projections.

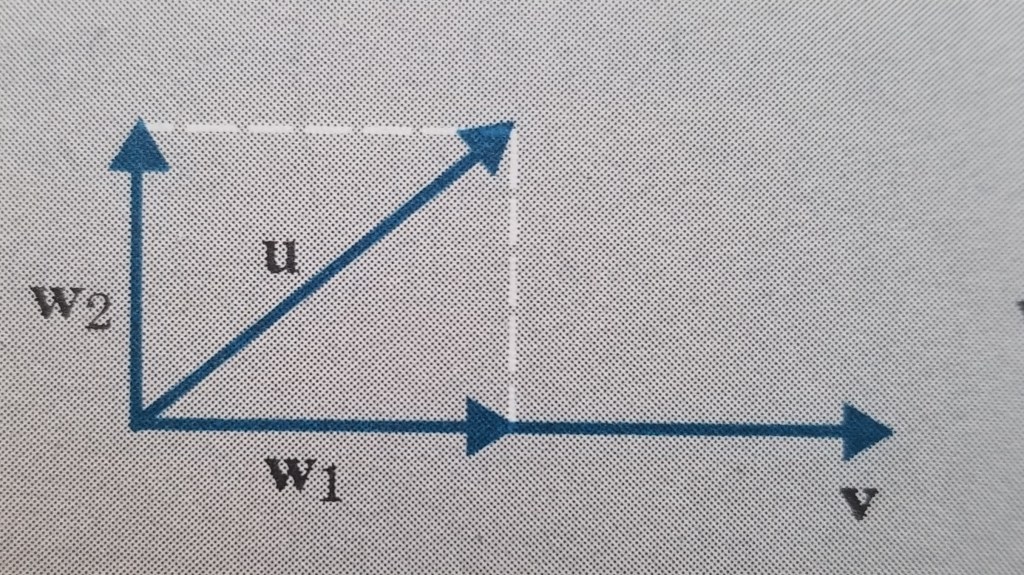

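Suppose we have two nonzero vectors $\mathbf{u}$ and $\mathbf{v}$ in 2-space. The vector $\mathbf{u}$ can be written as a sum of two vectors, say, $\mathbf{w}_1$ and $\mathbf{w}_2$:

$$\mathbf{u} = \mathbf{w}_1 + \mathbf{w}_2 \tag{i}$$

where $\mathbf{w}_1$ is constructed to be parallel to the adjacent vector $\mathbf{v}$ and $\mathbf{w}_2$ is made to be orthogonal (perpendicular) to it, as shown below.

In the diagram, vector $\mathbf{w}_1$ is called the orthogonal projection of $\mathbf{u}$ on $\mathbf{v}$ and vector $\mathbf{w}_2$ is called the component of $\mathbf{u}$ orthogonal to $\mathbf{v}$.

Since $\mathbf{w}_1$ is simply a scaled version of vector $\mathbf{v}$, it can be written as

$$\mathbf{w}_1 = k\mathbf{v} \tag{ii}$$

where $k$ is a scalar.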

The formulas we are in pursuit of are those that express and

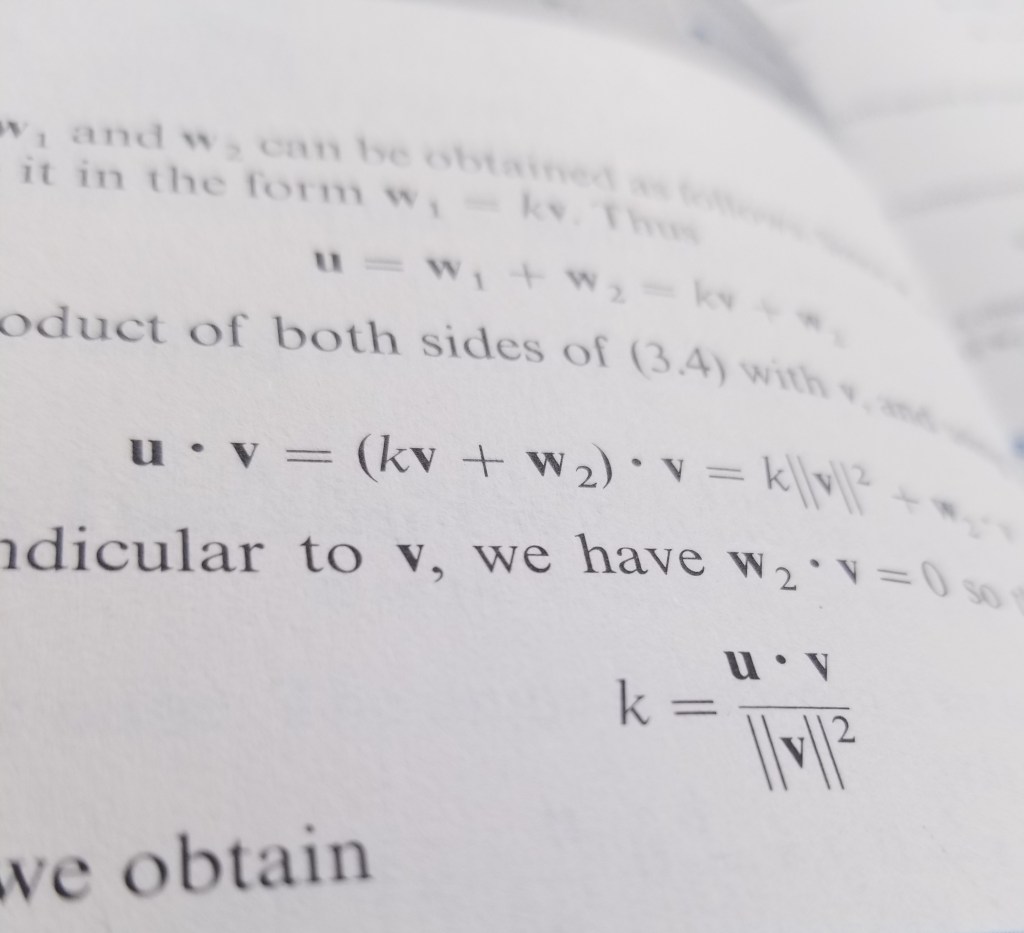

in terms of just u and v. With this in mind, let us substitute (ii) into (i):

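$$\mathbf{u} = k\mathbf{v} + \mathbf{w}_2 \tag{iii}$$

To get rid of $\mathbf{w}_2$, take the dot product of each side with $\mathbf{v}$:

$$\mathbf{u} \cdot \mathbf{v} = k(\mathbf{v} \cdot \mathbf{v}) + \mathbf{w}_2 \cdot \mathbf{v}$$

Notice that $\mathbf{w}_2$ and $\mathbf{v}$ are orthogonal vectors. Thus, their dot product is zero. The dot product $\mathbf{v} \cdot \mathbf{v}$ is equal to $\|\mathbf{v}\|^2$, so

$$\mathbf{u} \cdot \mathbf{v} = k\|\mathbf{v}\|^2.$$

Solving for $k$,

$$k = \frac{\mathbf{u} \cdot \mathbf{v}}{\|\mathbf{v}\|^2}.$$

Good. Now we have the scalar $k$ in terms of just $\mathbf{u}$ and $\mathbf{v}$. From here, we simply substitute $k$ into (ii):

$$\mathbf{w}_1 = \frac{\mathbf{u} \cdot \mathbf{v}}{\|\mathbf{v}\|^2}\,\mathbf{v}$$

We have successfully derived the formula for the orthogonal projection of $\mathbf{u}$ on $\mathbf{v}$. Now, we can substitute this into our vector sum from earlier ($\mathbf{u} = \mathbf{w}_1 + \mathbf{w}_2$):

$$\mathbf{u} = \frac{\mathbf{u} \cdot \mathbf{v}}{\|\mathbf{v}\|^2}\,\mathbf{v} + \mathbf{w}_2$$

Solve for $\mathbf{w}_2$:

$$\mathbf{w}_2 = \mathbf{u} - \frac{\mathbf{u} \cdot \mathbf{v}}{\|\mathbf{v}\|^2}\,\mathbf{v}.$$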
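As a quick check of the two formulas, here is a small worked example. The vectors are chosen purely for illustration and do not come from the diagrams: take $\mathbf{u} = (4, 2)$ and $\mathbf{v} = (1, 1)$, writing $\mathbf{w}_1$ for the orthogonal projection of $\mathbf{u}$ on $\mathbf{v}$ and $\mathbf{w}_2$ for the component of $\mathbf{u}$ orthogonal to $\mathbf{v}$.

$$
\begin{aligned}
\mathbf{u} \cdot \mathbf{v} &= 4(1) + 2(1) = 6, \qquad \|\mathbf{v}\|^2 = 1^2 + 1^2 = 2, \\
k &= \frac{\mathbf{u} \cdot \mathbf{v}}{\|\mathbf{v}\|^2} = \frac{6}{2} = 3, \\
\mathbf{w}_1 &= k\mathbf{v} = 3(1, 1) = (3, 3), \\
\mathbf{w}_2 &= \mathbf{u} - \mathbf{w}_1 = (4, 2) - (3, 3) = (1, -1).
\end{aligned}
$$

As expected, $\mathbf{w}_2 \cdot \mathbf{v} = 1(1) + (-1)(1) = 0$, so $\mathbf{w}_2$ really is orthogonal to $\mathbf{v}$, and $\mathbf{w}_1 + \mathbf{w}_2 = (4, 2) = \mathbf{u}$.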

Done! We have just derived general formulas for the orthogonal projection of $\mathbf{u}$ on an adjacent vector $\mathbf{v}$ and for the component of $\mathbf{u}$ orthogonal to $\mathbf{v}$. All that is necessary from the user’s end is to plug in the components of each vector, compute a couple of dot products, and recall how to calculate the norm of a vector.
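For readers who would rather let a computer do the plugging in, the recipe can be sketched in a few lines of plain Python. This is only an illustrative sketch: the function names `dot` and `project` and the sample vectors are assumptions made for this example, not part of the derivation itself.

```python
def dot(a, b):
    """Dot product of two vectors given as equal-length sequences."""
    return sum(x * y for x, y in zip(a, b))


def project(u, v):
    """Return (w1, w2) for vectors u and v.

    w1 is the orthogonal projection of u on v: ((u . v) / ||v||^2) v
    w2 is the component of u orthogonal to v:  u - w1
    """
    vv = dot(v, v)  # v . v equals ||v||^2
    if vv == 0:
        raise ValueError("v must be a nonzero vector")
    k = dot(u, v) / vv                   # the scalar k from the derivation
    w1 = [k * x for x in v]              # w1 = k v
    w2 = [a - b for a, b in zip(u, w1)]  # w2 = u - w1
    return w1, w2


# Hypothetical sample vectors, chosen only for illustration.
w1, w2 = project([4, 2], [1, 1])
print(w1, w2)  # prints: [3.0, 3.0] [1.0, -1.0]
```

Since the norm enters only as $\|\mathbf{v}\|^2$, the sketch uses the dot product of `v` with itself and never needs a square root.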

A wonderful derivation, indeed!